Introduction: Why “Lane Assist” Not “Guardrails”

When we talk about AI usage, the language we use matters. The term “AI guardrails” has become popular, but it misses something fundamental about how AI should work in practice.

Guardrails are rigid barriers. They prevent you from going somewhere. They say “you can’t do this.” They’re fixed in place, reactive, and designed to stop motion once you’ve already gone too far and left the safe road. Usually, if you hit a guardrail at high speed, your car is damaged, you cannot continue the trip, and you need a tow truck.

Lane assist is dynamic guidance. It helps you stay on course while you’re moving. It gently corrects you when you drift and reduces the cognitive load of driving. It works with you and for you, not against you. Most importantly, it doesn’t prevent you from changing lanes when you need to; it just makes sure you do it intentionally. And you can still go as fast as you want.

This distinction captures the essence of effective AI deployment: we don’t want rigid barriers that stop AI from being useful. We want intelligent systems that keep AI operating safely while preserving its power and flexibility. We want systems that can automatically adjust their level of automation based on the situation—staying autonomous when safe, escalating to human judgment when needed.

This is the story of how AI evolved in banking, and how understanding that evolution helps us build better lane assist systems.

The Evolution of AI Usage

Artificial intelligence has moved from experimental technology to essential infrastructure, but many professionals struggle to understand how to use it effectively. The challenge isn’t just adopting AI—it’s knowing when to trust it, when to verify it, and how to maintain control while gaining efficiency.

This guide explores the evolution of AI through three critical phases: the deterministic computing era, the arrival of generative AI, and the mature collaboration models we now use. Understanding this journey helps us use AI wisely in high-stakes banking environments.

Before AI: The Oracle Era of Deterministic Computing

For decades, banking ran on deterministic computing—systems that delivered the same output every time for the same input. These “Oracle” systems were predictable, reliable, and trustworthy.

How traditional systems worked:

- Input data → Algorithm → Predictable output

- Same input always produces same result

- Examples: calculators, spreadsheets, databases, risk scoring models

In banking applications:

- Credit scoring: Input financial history → Get exact credit score

- Loan calculations: Input principal and rate → Calculate payment schedule

- Transaction processing: Input account details → Execute or reject transaction

- Compliance checks: Input transaction data → Pass or fail regulatory test
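The loan-calculation example is the classic Oracle case, because the answer comes from a closed-form formula. The sketch below uses the standard fixed-rate annuity formula. It assumes monthly compounding and no fees, and the function names are illustrative rather than taken from any particular banking system. Calling it twice with the same inputs always produces the same schedule.

```python
def monthly_payment(principal: float, annual_rate: float, months: int) -> float:
    """Fixed-rate annuity payment: P * r / (1 - (1 + r) ** -n), with r the monthly rate."""
    r = annual_rate / 12
    if r == 0:
        return principal / months  # zero-interest edge case
    return principal * r / (1 - (1 + r) ** -months)


def payment_schedule(principal: float, annual_rate: float, months: int) -> list[tuple[int, float, float, float]]:
    """Return (month, interest, principal_paid, remaining_balance) rows."""
    r = annual_rate / 12
    payment = monthly_payment(principal, annual_rate, months)
    balance = principal
    rows = []
    for month in range(1, months + 1):
        interest = balance * r               # interest accrued on the current balance
        principal_paid = payment - interest  # the rest of the payment reduces the balance
        balance -= principal_paid
        rows.append((month, interest, principal_paid, balance))
    return rows


if __name__ == "__main__":
    # Same input, same output, every time: the defining property of an Oracle system.
    print(round(monthly_payment(100_000, 0.06, 360), 2))  # prints 599.55
```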

Key characteristics:

- Accurate: Based on precise formulas and rules

- Reliable: Consistent results every time

- Trustworthy: Banks could depend on the answer

- Fast: Accelerated what humans could do manually

Our role then: We were operators. We used computers as tools to get correct answers faster. The technology served us, and we understood exactly how it worked.

AI Arrives: The Muse—A New Paradigm

When generative AI emerged, everything changed. This wasn’t just an upgrade to existing systems—it was a fundamental shift in how technology works.

The new paradigm:

- Input → Pattern recognition → Probabilistic output

- Same input can produce different outputs

- Examples: ChatGPT, AI document analysis, predictive suggestions, automated reporting
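A toy sketch helps show why the same input can produce different outputs. A generative model turns its scores for candidate next words into probabilities, using a softmax scaled by a temperature, and then samples from them. The candidate words and scores below are made up for illustration. Real models do this over vocabularies of many thousands of tokens.

```python
import math
import random


def sample_next(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick the next word from made-up model scores using temperature sampling."""
    if temperature <= 0:
        # Greedy decoding: always the highest-scoring word (deterministic).
        return max(scores, key=scores.get)
    top = max(scores.values())
    # Softmax with temperature; subtracting the max keeps exp() numerically stable.
    weights = [math.exp((s - top) / temperature) for s in scores.values()]
    return rng.choices(list(scores), weights=weights, k=1)[0]


if __name__ == "__main__":
    # Hypothetical scores for the word after "Your request has been ..."
    scores = {"approved": 2.0, "received": 1.6, "escalated": 0.7}
    rng = random.Random()
    print([sample_next(scores, 1.0, rng) for _ in range(5)])  # varies from run to run
    print([sample_next(scores, 0.0, rng) for _ in range(5)])  # always "approved"
```

At temperature zero the process collapses back into Oracle-like behavior. The variety at higher temperatures is what produces both fresh phrasing and the occasional confident error.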

In banking applications:

- Customer communication: AI drafts personalized responses to inquiries

- Fraud detection: AI suggests possible fraud patterns across transactions

- Document understanding: AI interprets complex loan agreements

- Risk analysis: AI proposes multiple scenarios and risk factors

Understanding Hallucinations: Not a Bug, But a Feature

Here’s the counterintuitive insight that transforms how we think about AI: hallucinations—AI generating plausible but incorrect information—aren’t just problems to solve. They’re actually the source of AI’s power.

Why hallucinations matter:

- AI doesn’t “know” facts the way databases do—it recognizes patterns

- This pattern recognition is exactly what makes it creative and valuable

- It can suggest connections no rule-based system would find

- It can identify anomalies humans might miss

- It generates possibilities rather than certainties

The fundamental trade-off:

- Oracle (traditional systems): 100% accurate within defined parameters, but limited to programmed rules

- Muse (AI systems): Creative and exploratory, but requires human verification

Our role shifts: From operator to curator. We now evaluate and refine AI suggestions rather than simply executing commands.

The Power of the Muse: Why Pattern Recognition Matters

Consider fraud detection as an example of how the Muse approach differs from traditional systems.

Oracle approach (traditional rule-based system):

- Rule: “Flag wire transfers over $100,000 to new beneficiaries”

- Catches: Only transactions matching exact programmed rules

- Misses: Sophisticated fraud that doesn’t match known patterns

Muse approach (AI pattern recognition):

- Recognizes subtle patterns: transaction timing, communication style, beneficiary behavior, account history

- Suggests: “This transaction resembles these five unusual cases from last year”

- Can identify connections that might indicate fraud

- Human investigator verifies if the pattern represents real risk or a false positive

The value proposition: AI’s “hallucinations”—its pattern-matching capability—finds non-obvious connections. This is valuable even when sometimes wrong, as long as we verify before taking action.
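Here is a minimal sketch of the two approaches side by side. The rule comes from the Oracle example above. The Muse side is approximated by a simple nearest-neighbour search over past cases, which is only one of many ways a pattern-based system can find resemblance. The features, their scaling, and the names `Transfer` and `most_similar` are hypothetical and chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Transfer:
    amount: float          # USD
    new_beneficiary: bool
    hour: int              # hour of day, 0-23 (hypothetical feature)
    account_age_days: int  # hypothetical feature


def oracle_rule(t: Transfer) -> bool:
    """Deterministic rule: flag wire transfers over $100,000 to new beneficiaries."""
    return t.amount > 100_000 and t.new_beneficiary


def features(t: Transfer) -> tuple[float, ...]:
    # Crude scaling so that no single feature dominates the distance (an assumption).
    return (
        math.log10(max(t.amount, 1.0)),
        float(t.new_beneficiary),
        t.hour / 24,
        min(t.account_age_days, 3650) / 3650,
    )


def most_similar(t: Transfer, past_cases: dict[str, Transfer], k: int = 5) -> list[str]:
    """Return the IDs of the k past cases closest to t (Euclidean distance)."""
    target = features(t)
    ranked = sorted(past_cases, key=lambda cid: math.dist(target, features(past_cases[cid])))
    return ranked[:k]


if __name__ == "__main__":
    past = {
        "case-A": Transfer(90_000, True, 2, 15),
        "case-B": Transfer(500, False, 14, 3000),
        "case-C": Transfer(99_000, True, 4, 30),
    }
    suspect = Transfer(95_000, True, 3, 20)
    print(oracle_rule(suspect))              # False: just under the rule's threshold
    print(most_similar(suspect, past, k=2))  # the two look-alike cases, for a human to review
```

The $95,000 transfer slips past the fixed rule but still surfaces next to similar past cases. Whether that resemblance means real fraud is the investigator's call.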

Banking applications where the Muse excels:

- Drafting customer communications with appropriate tone and context

- Exploring “what-if” scenarios in credit risk modeling

- Identifying unusual transaction patterns for fraud review

- Generating executive summaries from complex financial reports

- Analyzing unstructured data in loan applications

Remember: The Muse gives you possibilities to explore. The Oracle gives you answers to trust. Both have their place.

AI Matures: Understanding the GUSPATO Collaboration Model

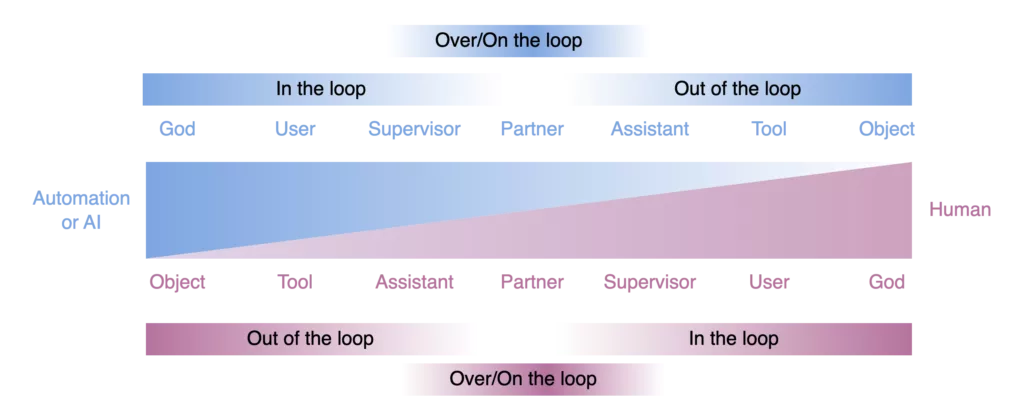

As organizations gained experience with AI, researchers identified seven distinct ways humans and AI can collaborate. The GUSPATO model provides a framework for choosing the right interaction mode.

The Seven Collaboration Levels

| Level | Name | Control | Best For |
|---|---|---|---|
| G | God | You create system rules | Building automation parameters |
| U | User | You use tool manually | Complex judgment calls |
| S | Supervisor | You oversee AI operations | AI recommends, you approve |
| P | Partner | Shared control | Balanced collaboration |
| A | Assistant | AI monitors your work | System requests your input |
| T | Tool | System uses you | You verify AI’s requests |
| O | Object | Full automation | Routine, low-risk operations |

Three States of Human Involvement

IN the Loop (G/U levels):

- You make every decision

- Full human control and oversight

- Example: Evaluating a complex commercial loan application

ON the Loop (S/P levels):

- AI operates, you supervise and can intervene

- You monitor key decisions and outcomes

- Example: AI flags suspicious transactions, you investigate

OUT of the Loop (A/T/O levels):

- AI operates autonomously

- You audit periodically

- Example: Automated account balance notifications

The Key Principle

Match your interaction mode to the task’s requirements:

- High stakes, complex decisions → Stay IN the loop (G/U)

- Medium complexity, manageable risk → Stay ON the loop (S/P)

- Routine operations, low risk → Can go OUT of the loop (A/T/O)
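The mapping can be written down as a small lookup table, which is handy when tagging each AI use case with its intended level. This is a sketch that follows the three states described above. The three-way `risk` label is a simplification, and deciding a task's risk is itself a human judgment.

```python
from enum import Enum


class Loop(Enum):
    IN = "IN the loop"
    ON = "ON the loop"
    OUT = "OUT of the loop"


# GUSPATO level -> state of human involvement, following the three states above.
LEVEL_TO_LOOP = {
    "G": Loop.IN, "U": Loop.IN,
    "S": Loop.ON, "P": Loop.ON,
    "A": Loop.OUT, "T": Loop.OUT, "O": Loop.OUT,
}

# Key principle: match the interaction mode to the task's risk.
RISK_TO_LOOP = {"high": Loop.IN, "medium": Loop.ON, "low": Loop.OUT}


def levels_for(risk: str) -> list[str]:
    """Return the GUSPATO levels appropriate for a task of the given risk."""
    loop = RISK_TO_LOOP[risk]
    return [level for level, state in LEVEL_TO_LOOP.items() if state is loop]


if __name__ == "__main__":
    for risk in ("high", "medium", "low"):
        print(risk, RISK_TO_LOOP[risk].value, levels_for(risk))
```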

Reality Check: We Can’t Delegate Blindly

Banking needs AI’s speed and cost efficiency, but we face a critical tension: we can’t blindly delegate high-stakes financial decisions to systems that operate probabilistically.

Why Full Automation Isn’t Possible

1. AI makes confident mistakes

- Generates plausible but incorrect information

- Example: AI invents a regulation citation that sounds official but doesn’t exist

- A traditional system would return an error; AI produces something believable instead

2. High-stakes decisions require human judgment

- Large credit decisions with complex risk factors

- Sensitive customer situations requiring empathy

- Legal and regulatory implications

- Novel situations not represented in training data

3. AI fails silently—“strong, silent, and wrong”

- No error messages like traditional systems

- You might not know something went wrong until significant damage occurs

- Confidence scores don’t always reflect actual reliability

4. Skill erosion threatens institutional knowledge

- If AI handles everything, how do employees develop expertise?

- Junior analysts need hands-on experience with real cases

- Competency comes from doing, not just reviewing AI output

5. Loss of situational awareness impairs intervention

- Being OUT of the loop makes taking control difficult

- You lose the context needed to make informed decisions

- When crises emerge, you’re not prepared to respond effectively

The Central Question

How do we capture AI’s speed and cost benefits while maintaining safety, control, and human expertise?

The answer: A layered approach with built-in degradation capabilities.

The Solution: Layered Defense with Degradation

The key to effective AI use in banking is running systems autonomously for efficiency while maintaining the ability to degrade to lower automation levels when situations demand human judgment.

Core Principles

1. Multiple layers of defense

- Start with full automation for routine tasks (OUT of the loop)

- Each layer can escalate to the next level of human involvement

- Human expertise serves as the final layer (IN the loop)

- Progressive safeguards catch issues at appropriate levels

2. Degradation capability

- Systems automatically move from OUT → ON → IN the loop based on risk signals

- AI detects uncertainty or anomalies and escalates

- Humans can manually take control at any time

- No single point of failure

3. Feedback loops are critical

- Human decisions and discoveries feed back to improve AI models

- AI learns from escalations and errors

- Each layer enhances the others through shared intelligence

- Continuous learning creates a smarter overall system

4. Always maintain capability for full human control

- Never lose the ability to operate manually

- Staff can be brought back IN the loop at any moment

- Regular training maintains intervention capability

- “Use it or lose it” applies to critical skills
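The degradation principle can be sketched as a small state machine with three rules:

- Automatic escalation moves a case one step toward human control (OUT → ON → IN).
- A human can jump straight to IN at any time.
- Nothing in the automated path moves a case back toward less oversight.

The class and method names are hypothetical, and what counts as a risk signal is left to the caller.

```python
from enum import IntEnum


class Mode(IntEnum):
    # Higher value means more human involvement.
    OUT = 0  # OUT of the loop: AI operates autonomously
    ON = 1   # ON the loop: AI operates, a human supervises
    IN = 2   # IN the loop: a human makes the decision


class DegradableCase:
    """Tracks how much human involvement a single case currently has (hypothetical class)."""

    def __init__(self, case_id: str) -> None:
        self.case_id = case_id
        self.mode = Mode.OUT  # routine work starts fully automated
        self.history: list[tuple[Mode, str]] = [(Mode.OUT, "created")]

    def escalate(self, reason: str) -> Mode:
        """Automatic degradation: move one step toward human control, never away from it."""
        if self.mode < Mode.IN:
            self.mode = Mode(self.mode + 1)
        self.history.append((self.mode, reason))
        return self.mode

    def take_control(self, who: str) -> Mode:
        """Manual override: a human can jump straight to IN from any state."""
        self.mode = Mode.IN
        self.history.append((self.mode, f"manual override by {who}"))
        return self.mode

    def release(self, approver: str) -> Mode:
        """Lowering oversight is deliberate: it requires a named human approver."""
        if not approver:
            raise PermissionError("only a named human may reduce oversight")
        self.mode = Mode.OUT
        self.history.append((self.mode, f"released by {approver}"))
        return self.mode


if __name__ == "__main__":
    case = DegradableCase("tx-1042")
    case.escalate("anomaly score above threshold")  # OUT -> ON
    case.escalate("conflicting data sources")       # ON -> IN
    for mode, note in case.history:
        print(mode.name, note)
```

Keeping escalation one-directional in the automated path mirrors the lane-assist idea. The system can nudge a case toward people, but only a person deliberately changes lanes back.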

The Benefits

- Efficiency: Automation handles high volume

- Safety: Humans oversee critical decisions

- Learning: System improves from feedback

- Flexibility: Right level of control for each situation

- Resilience: Can degrade gracefully under stress

Real-World Example: Layered Fraud Detection

Here’s how a major financial institution implements the layered approach for fraud detection across retail banking operations.

Layer 1: Automated Screening

- Mode: OUT of the loop (GUSPATO Level: O)

- Function: AI screens all transactions in real-time

- Speed: Processes millions of transactions daily

- Escalation trigger: Suspicious patterns or unusual behavior detected

Layer 2: Digital Forensics

- Mode: ON the loop (GUSPATO Level: S/P)

- Function: Specialized tools analyze flagged transactions for manipulation

- Human role: Analysts supervise findings and verify anomalies

- Escalation trigger: Complex patterns requiring deeper investigation

Layer 3: Behavioral Analytics

- Mode: ON the loop (GUSPATO Level: P)

- Function: Cross-account analysis, pattern recognition across customer base

- Human role: Interpret context, assess significance, consider customer history

- Escalation trigger: Patterns suggesting organized fraud or otherwise requiring expert investigation

Layer 4: Fraud Investigation Team

- Mode: IN the loop (GUSPATO Level: U)

- Function: Experienced investigators with AI-assisted tools

- Human role: Final judgment on complex cases, customer contact, legal action

- Decision authority: Human makes the final call

The Feedback Loop in Action

- Layer 1 flags cases → Layer 2 analyzes evidence

- Layer 2 findings → Improve Layer 3 models and detection rules

- Layer 3 patterns → Inform Layer 4 investigation priorities

- Layer 4 discoveries → Update Layer 1 algorithms and rules

Degradation in Action

The system starts at Layer 1 (autonomous, fast, cost-effective) and automatically escalates based on confidence levels and risk indicators. Each layer adds human involvement and judgment. The system can degrade all the way to full human control at Layer 4 when needed.

Critical insight: As case complexity and stakes increase, human involvement increases proportionally. This balance enables both efficiency and safety.
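The escalation path can be sketched as a routing function that sends each alert to the layer that should own it. The thresholds are invented for illustration: the confidence cut-off, the $50,000 amount, and the `linked_accounts` counts. A real institution would calibrate them against its own data and risk appetite.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    fraud_score: float       # Layer 1 model output, 0.0-1.0
    model_confidence: float  # how sure the model is of that score, 0.0-1.0
    amount: float            # USD
    linked_accounts: int     # other accounts showing the same pattern (hypothetical field)


def route(alert: Alert) -> int:
    """Return the layer (1-4) that should own this case. All thresholds are hypothetical."""
    # Layer 4: high stakes or signs of organized fraud go to investigators.
    if alert.amount >= 50_000 or alert.linked_accounts >= 3:
        return 4
    # Layer 3: the pattern spans accounts, or the model is unsure of itself.
    if alert.linked_accounts >= 1 or alert.model_confidence < 0.6:
        return 3
    # Layer 2: the model is confident something is wrong; an analyst verifies.
    if alert.fraud_score >= 0.5:
        return 2
    # Layer 1: confident, low-score, low-value traffic stays automated.
    return 1


if __name__ == "__main__":
    print(route(Alert(fraud_score=0.8, model_confidence=0.9, amount=2_000.0, linked_accounts=0)))  # 2
```

The checks run from most to least severe, so a case always lands at the highest layer that any trigger demands.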

Implementing Your Own Layered Approach

Here’s how to design layered AI systems for your banking operations.

Layer 1: Full Automation (OUT of the Loop)

- Best for: Routine, low-risk tasks

- Characteristics: High-confidence AI decisions, clear rules and patterns

- Examples: Account balance notifications, transaction confirmations, standard data entry, routine status updates

Layer 2: AI with Oversight (ON the Loop)

- Best for: Medium complexity tasks

- Characteristics: AI handles operations, human monitors dashboards

- Examples: Standard loan processing, document categorization, compliance screening, customer service routing

Layer 3: Collaborative Analysis (ON the Loop)

- Best for: Shared decision-making scenarios

- Characteristics: AI provides insights, human interprets and applies context

- Examples: Credit risk assessment, fraud pattern investigation, portfolio analysis, regulatory reporting

Layer 4: Human Expertise (IN the Loop)

- Best for: High-stakes, complex cases

- Characteristics: AI assists with data and analysis, human makes final decision

- Examples: Large commercial loans, sensitive complaints, legal matters, major account closures

Designing Escalation Triggers

Your system should automatically escalate when it encounters:

- Low confidence scores: AI confidence below a defined threshold

- Unusual patterns: Situations not well-represented in training data

- High stakes: Financial or reputational risk above tolerance

- Customer distress: Upset customers or sensitive situations

- Conflicting signals: Multiple data sources providing contradictory information

- Legal implications: Potential regulatory or compliance issues

- Manual override: Employee or customer requests human review
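One way to make these triggers concrete is a single check that returns every trigger a case hits, so the reasons can be logged alongside the escalation. The field names and threshold values are hypothetical placeholders, not recommended settings. How a "novelty" score is computed is left open.

```python
from dataclasses import dataclass

CONFIDENCE_FLOOR = 0.80     # hypothetical
NOVELTY_CEILING = 0.90      # hypothetical: 1.0 means unlike anything seen in training data
EXPOSURE_LIMIT = 250_000.0  # hypothetical risk tolerance in USD


@dataclass
class CaseSignals:
    confidence: float
    novelty: float
    exposure: float
    customer_distress: bool = False
    conflicting_sources: bool = False
    legal_flag: bool = False
    review_requested: bool = False


def escalation_reasons(s: CaseSignals) -> list[str]:
    """Return every trigger the case hits; an empty list means it may stay automated."""
    checks = [
        (s.confidence < CONFIDENCE_FLOOR, "low confidence"),
        (s.novelty > NOVELTY_CEILING, "unusual pattern"),
        (s.exposure > EXPOSURE_LIMIT, "high stakes"),
        (s.customer_distress, "customer distress"),
        (s.conflicting_sources, "conflicting signals"),
        (s.legal_flag, "legal implications"),
        (s.review_requested, "manual override"),
    ]
    return [reason for hit, reason in checks if hit]
```

Returning all reasons rather than stopping at the first one matters for the feedback loop. The logged reasons are what later pattern reviews work from.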

Implementing Feedback Loops

Make your system smarter over time:

- Document all escalations with reasons and context

- Capture human decisions and the reasoning behind them

- Regular pattern reviews to identify systemic issues

- Model refinement cycles based on real-world performance

- Training updates incorporating new case types and scenarios
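The first two items amount to keeping a structured record of each escalation and its outcome. In this minimal sketch, an in-memory list stands in for whatever case-management store is actually used. Each record captures the triggers, the human decision, and the reasoning. A simple review then counts how often each trigger turned out to be a false alarm, which is the kind of signal a model-refinement cycle could act on. The `"clear"` decision label is an assumption.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class EscalationRecord:
    case_id: str
    reasons: list[str]      # triggers that fired
    ai_recommendation: str
    human_decision: str     # "clear" is assumed to mean "nothing wrong found"
    rationale: str          # the reviewer's reasoning, in their own words
    decided_at: datetime


def false_alarm_rate_by_reason(log: list[EscalationRecord]) -> dict[str, float]:
    """Share of escalations per trigger where the human found nothing wrong."""
    fired: Counter = Counter()
    cleared: Counter = Counter()
    for rec in log:
        for reason in rec.reasons:
            fired[reason] += 1
            if rec.human_decision == "clear":
                cleared[reason] += 1
    return {reason: cleared[reason] / fired[reason] for reason in fired}


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    log = [
        EscalationRecord("c1", ["low confidence"], "block", "clear", "known payroll pattern", now),
        EscalationRecord("c2", ["low confidence", "high stakes"], "block", "block", "mule account", now),
    ]
    print(false_alarm_rate_by_reason(log))
```

A trigger that almost always proves to be a false alarm is a candidate for retuning. The recorded rationales show reviewers why it misfired.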

Oracle vs. Muse: A Decision Guide

Knowing which technology to use for each task is fundamental to effective AI implementation.

Use ORACLE (Deterministic/Traditional) When:

- You need exact, verifiable answers

- Performing calculations and data retrieval

- Running regulatory compliance checks

- Calculating loan terms and payment schedules

- Processing standard transactions

- Any situation where “correct” matters more than “creative”

Use MUSE (Generative AI) When:

- Drafting customer communications

- Exploring scenarios and possibilities

- Recognizing patterns in complex data

- Creative problem-solving

- Generating options for human evaluation

- BUT ALWAYS VERIFY before using in production

The Hybrid Approach (Best Practice)

Combine both technologies strategically:

- Use Oracle for calculations → Use Muse to explain results to customers

- Use Muse to draft communications → Use Oracle to verify policy terms

- Use Oracle for compliance → Use Muse to identify edge cases

- Use Muse to find patterns → Use Oracle to validate with hard data
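The Muse-drafts, Oracle-verifies pairing can be sketched as a check on an AI-drafted message. It extracts every dollar amount and compares each one with the figures the deterministic system computed. The draft text is made up, and the regular expression handles only simple amounts like `$1,234.56`. `verify_draft` is a hypothetical helper; a production check would verify far more than amounts.

```python
import re

# Simple dollar amounts: "$25", "$100,000", "$599.55" (an intentionally narrow pattern).
AMOUNT = re.compile(r"\$(\d[\d,]*(?:\.\d{2})?)")


def amounts_in(text: str) -> list[float]:
    """Extract simple dollar amounts from text."""
    return [float(m.replace(",", "")) for m in AMOUNT.findall(text)]


def verify_draft(draft: str, authoritative: set[float], tolerance: float = 0.005) -> list[float]:
    """Return amounts in the AI draft that match no Oracle-computed figure.

    An empty list means every amount checks out; anything else should block
    sending and route the draft to a human.
    """
    return [
        a for a in amounts_in(draft)
        if not any(abs(a - b) <= tolerance for b in authoritative)
    ]


if __name__ == "__main__":
    oracle_figures = {100_000.00, 599.55}  # computed by the deterministic system
    draft = "Your loan of $100,000 has a monthly payment of $595.55."
    print(verify_draft(draft, oracle_figures))  # [595.55] -> hold for human review
```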

Red Flags: Degrade to Human Control When:

- Output seems plausible but cannot be verified

- High-stakes decision with major financial consequences

- Novel situation outside AI’s training domain

- Customer shows distress or situation is sensitive

- System behavior is unexpected or unexplainable

- Legal or regulatory implications are present

Maintaining Human Capability: Don’t Lose Control

The most critical risk in AI adoption is losing the institutional capability to intervene when automated systems fail or encounter novel situations.

The Risk of Over-Automation

If AI handles everything, employees lose the skills and knowledge needed to take control during crises. This creates dangerous dependency.

The Solution: Deliberate Capability Maintenance

1. Structured training and development

- Junior staff rotate through manual processes

- Regular skill assessments on core competencies

- Understanding fundamentals, not just AI outputs

- Practice scenarios for taking control

2. “Proper paranoia” as a cultural norm

- Stay mentally prepared to intervene

- Maintain clear understanding of what “right” looks like

- Recognize anomalies quickly

- Never become complacent about automation

3. Regular manual reviews and audits

- Periodically audit automated processes

- Sample cases handled fully by AI

- Verify outputs remain accurate over time

- Catch model drift before it causes problems

4. Clear override procedures

- Anyone can escalate when something seems wrong

- Easy-to-use manual intervention mechanisms

- No organizational penalty for appropriate caution

- Document and learn from all overrides

5. Maintain situational awareness

- Even when OUT of the loop, stay informed

- Understand what AI is doing and why

- Review escalation reports regularly

- Keep context for when you need to assume control
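The audit practice in item 3 can be sketched in two parts. First, draw a reproducible random sample of fully automated cases for human re-review. Second, compare this period's human-AI disagreement rate against a baseline. The 2% sample rate and the tolerance are hypothetical. The seeded generator lets an auditor reproduce exactly which cases were drawn.

```python
import random


def audit_sample(case_ids: list[str], rate: float, seed: int) -> list[str]:
    """Draw a reproducible random sample of automated cases for manual review."""
    if not case_ids:
        return []
    rng = random.Random(seed)
    k = min(len(case_ids), max(1, round(len(case_ids) * rate)))
    return sorted(rng.sample(case_ids, k))


def drift_alert(disagreements: int, reviewed: int, baseline_rate: float, tolerance: float = 0.02) -> bool:
    """Flag possible model drift when reviewers overrule the AI more often than usual."""
    if reviewed == 0:
        return False
    return disagreements / reviewed > baseline_rate + tolerance


if __name__ == "__main__":
    ids = [f"case-{i:05d}" for i in range(10_000)]
    sample = audit_sample(ids, rate=0.02, seed=2024)  # hypothetical 2% monthly sample
    print(len(sample), sample[:3])
    print(drift_alert(disagreements=12, reviewed=len(sample), baseline_rate=0.03))
```

A rising disagreement rate does not prove the model has drifted, but it is a cheap, early prompt to look before problems reach customers.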

The Goal

The objective isn’t eliminating humans from banking operations. It’s positioning them where they add the most value: judgment, contextual understanding, empathy, and handling novel situations that AI cannot manage.

Key Takeaways: What to Remember

1. Two Technologies, Two Purposes

- Oracle (traditional): Deterministic, trustworthy answers for calculations and rules

- Muse (generative AI): Pattern-based, creative suggestions requiring verification

- Hallucinations enable AI’s creative power—they’re not just problems

- Use the right tool for the right job

2. Collaboration Models Matter

- GUSPATO framework: Seven levels from full human control to full automation

- IN/ON/OUT of the loop: Match involvement level to task criticality

- Different objectives require different interaction modes

- Flexibility in collaboration improves both efficiency and safety

3. Speed + Safety = Layered Approach

- Start with automation for efficiency

- Build in degradation capability for safety

- Multiple layers from autonomous operation to human expertise

- System can escalate or degrade based on situation

4. Feedback Loops Are Essential

- Human discoveries improve AI performance

- AI insights guide human priorities and focus

- Creates a continuous learning system

- Each layer contributes intelligence to the others

5. Never Lose Human Capability

- Maintain skills and knowledge to take control

- Train regularly for intervention scenarios

- Practice “proper paranoia” about automation

- Humans handle what AI cannot—judgment, empathy, novel situations

The balance: Leverage AI for speed and cost efficiency, but keep humans in command for judgment and situations requiring contextual understanding.

Your Path Forward: Practical Next Steps

For Your Daily Work

1. Identify your current layers

- Where are you using AI today?

- What level of automation is it operating at?

- Can you escalate effectively when needed?

2. Design degradation paths

- For each AI application, ask: “How do we take control?”

- Define clear escalation triggers

- Document override procedures and test them

3. Implement feedback loops

- Capture insights from human escalations

- Feed discoveries back to improve AI models

- Establish regular review cycles

4. Maintain your expertise

- Don’t let AI make you passive

- Stay curious about why AI suggests what it does

- Keep core banking skills sharp through practice

5. Remember the evolution

- Oracle → Trustworthy answers for defined problems

- Muse → Creative possibilities (always verify!)

- GUSPATO → Right collaboration mode for each task

- Layers → Speed and safety working together

Conclusion: The Future of AI in Banking

The banking industry’s AI journey continues to evolve. Success doesn’t come from choosing between human judgment and artificial intelligence—it comes from understanding how to combine them effectively.

The Oracle era taught us to trust deterministic systems. The Muse era is teaching us to collaborate with probabilistic intelligence. The mature phase we’re entering now demands that we build systems that capture AI’s efficiency while preserving human oversight, judgment, and the ability to intervene.

Financial institutions that master this layered approach, automating where appropriate while maintaining degradation capability, will deliver both operational excellence and sound risk management. Those that automate blindly will face unexpected failures. Those that resist automation will face competitive disadvantage.

The path forward is clear: embrace AI’s power while respecting its limitations, build systems that can gracefully degrade under stress, and never lose the human capabilities that make sound banking possible.
