Show me a domain that has not already integrated AI, or is not at least thinking about it. But let’s step back and analyse the types of interaction we can have between a human and automation (or AI).

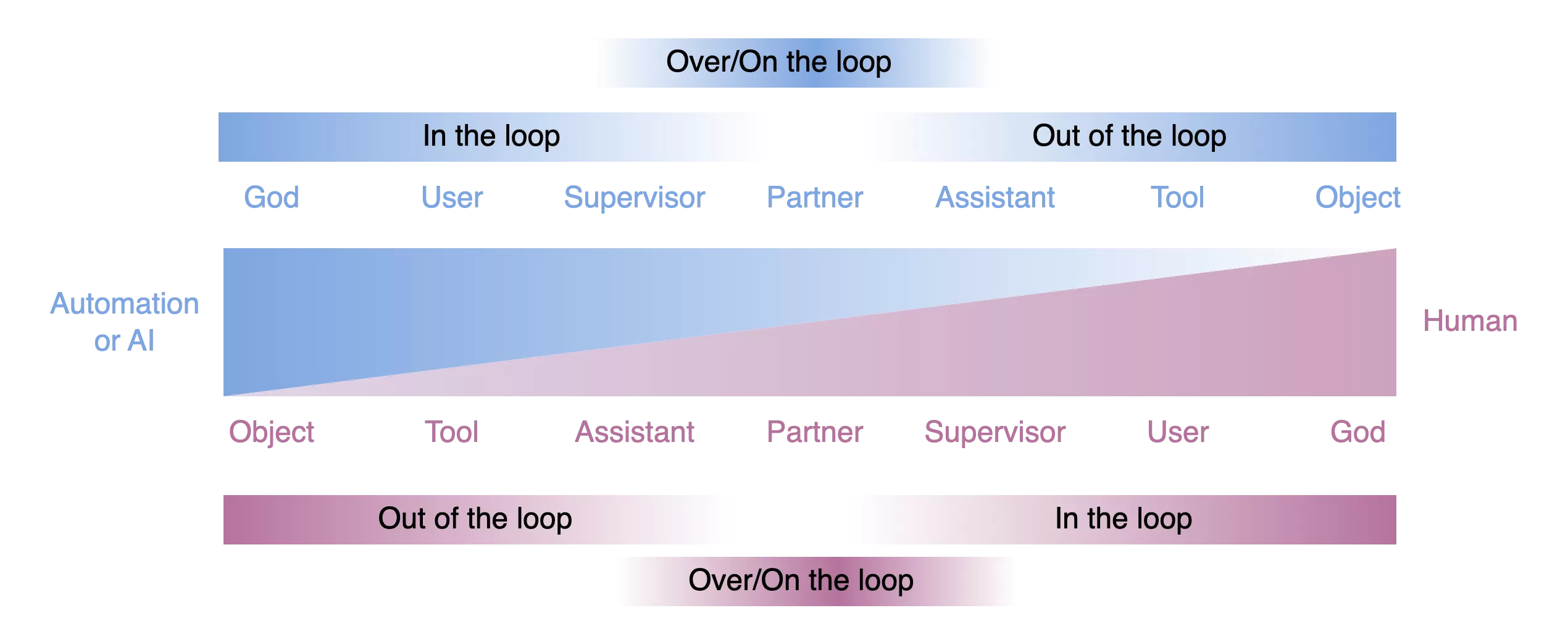

The ways in which humans can collaborate with automation are presented using the GUSPATO model. GUSPATO is an acronym formed from the first letters of the seven types of collaboration, in which control, authority and responsibility (in the terminology of the RCRAFT framework for automation by Bouzekri et al., 2021) migrate between the human and the technical system embedding automation.

Each position on the spectrum corresponds to one type of collaboration with automation.

The first describes a collaboration where the human is seen as a ‘god’ and creates the system and its outcome. In that case the system can be seen as an object belonging to the creator.

The second interaction represents the classical use of computers, where the system is a tool used by the user or operator. The tool may embed some automation, but control, authority and responsibility remain with the user/operator. Here, the human is inside the interaction loop, perceiving information provided by the system, processing it cognitively and triggering system functions when appropriate.

Interaction three shows an unbalanced sharing of control, authority and responsibility between the system (as assistant) and the human (as supervisor). The automation is more complex, and the system performs more complex tasks that the human has delegated to it. The human still holds control, authority and responsibility, but is positioned over the interaction loop, monitoring the partly autonomous behaviour of the system.

Interaction four, in the middle of the figure, corresponds to a symmetric relationship for control, authority and responsibility between the system and the human. In this type of collaboration, each entity can delegate tasks to the other and monitor its performance. Authority and responsibility are shared, and the human can be considered outside the interaction loop when the system performs tasks autonomously.

Interaction five reverses the collaboration presented in interaction three: now the human is an assistant to the system. In that context, the system might require the human to perform tasks and will monitor the human’s performance. The last two interactions in the figure involve a similar reversal of roles.

The last one corresponds, for instance, to generative AI, where the system creates objects and the human is an object amongst many others.
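One way to make the symmetry of the spectrum explicit is to encode it as data. The sketch below is a minimal Python illustration, assuming that the letters G, U, S, P, A, T and O stand for the human’s role in each position (god, user, supervisor, partner, assistant, tool, object). That expansion, the coarse `Holder` values and the system roles for positions six and seven are our inference from the descriptions above, not part of the GUSPATO definition as quoted here. The hypothetical `mirror` function captures the reversal described for interactions five to seven.

```python
from dataclasses import dataclass
from enum import Enum


class Holder(Enum):
    """Who predominantly holds control, authority and responsibility (a coarse simplification)."""
    HUMAN = "human"
    SHARED = "shared"
    SYSTEM = "system"


@dataclass(frozen=True)
class Collaboration:
    position: int     # 1..7, left to right along the spectrum in the figure
    human_role: str   # role of the human in this type of collaboration
    system_role: str  # role of the technical system (inferred by reversal for 5 to 7)
    holder: Holder


# Roles follow the descriptions in the text; the labels themselves are an assumed reading.
SPECTRUM = [
    Collaboration(1, "god", "object", Holder.HUMAN),
    Collaboration(2, "user", "tool", Holder.HUMAN),
    Collaboration(3, "supervisor", "assistant", Holder.HUMAN),
    Collaboration(4, "partner", "partner", Holder.SHARED),
    Collaboration(5, "assistant", "supervisor", Holder.SYSTEM),
    Collaboration(6, "tool", "user", Holder.SYSTEM),
    Collaboration(7, "object", "god", Holder.SYSTEM),
]


def mirror(c: Collaboration) -> Collaboration:
    """Return the collaboration with human and system roles swapped (position 8 - p)."""
    return SPECTRUM[len(SPECTRUM) - c.position]


acronym = "".join(c.human_role[0].upper() for c in SPECTRUM)
print(acronym)  # GUSPATO
for c in SPECTRUM:
    print(c.position, c.human_role, "<->", mirror(c).human_role)
```

Under this reading, the human’s role in each position is the system’s role in the mirrored position, and interaction four is its own mirror.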

In, over and out of the loop

In his 1936 black-and-white, part-talkie film Modern Times, Charlie Chaplin struggles to stay in the loop with the new automation installed at the factory. He is constantly thrown out of the loop, and in some respects remains in it even after walking away from the production line. He is like an air traffic controller who keeps replaying the traffic in her head after her shift is over, to figure out what could have been done better. It seems that not much has changed since the early introduction of automation. One small change did happen: Charlie Chaplin never experienced being on the loop.

We talk about being in the loop when we have direct control of the machine. Driving a manual transmission car in busy stop-and-go traffic during rush hour in the city keeps us in the loop of controlling the car. Executing a series of aerobatic manoeuvres in a 1945 Pitts Special keeps us in the loop of controlling the biplane. And constantly giving takeoff and landing clearances at a busy airport keeps us in the loop of controlling the traffic. We know exactly where every aircraft is and where we want it to go, and we keep monitoring to make sure it gets there.

We talk about being out of the loop when we have no control of the machine, or even no feedback on how the automated controller is managing it. We fill the coffee maker’s reservoir with water, make sure there are coffee beans in the hopper, place a cup under the brewing head, set the timer to have coffee ready when we wake up the next morning, and go to bed for the night. We have no direct control of the machine’s internal algorithm.

Charlie Chaplin in Modern Times (AI generated)

Hopefully, the smell of fresh coffee drifts into the bedroom just as the alarm clock goes off. We have been sleeping peacefully out of the coffee-making loop all night. Some Tesla drivers have been sleeping peacefully out of the car-driving loop, travelling at a constant distance behind the car in front according to the setting of their adaptive cruise control, while the lane-centring algorithm gently follows the curves in the road, careful not to wake them up.

Similarly, airline dispatchers increasingly rely on neural network or deep learning programs that spit out flight plans based on optimal winds, aircraft weight, temperature, traffic density, landing slot time, crew duty periods, passenger connection times and many other variables. How these plans are produced is largely opaque to dispatchers, which places them out of the loop.

When we know how to work the machine, staying in the loop is easy (provided the machine is designed to allow it) and can even be desirable as a way to reduce cognitive load.

We talk about being on the loop when we assume a supervisory role, when our only job is to make sure the automation does what it was designed to do. Of course, there is a minor catch to that seeming luxury. If the automation fails in some way, we need to be able to jump in and intervene so the operation can continue to flow smoothly.

Imagine having to supervise the coffee maker through the night. Chances are, you won’t get much sleep. If the coffee maker were designed to be supervised, it would need some indication of what state it is in. You would have to be trained to know the precise sequence of states it can go through while making the coffee, so that you could predict when it is likely to transition to the next state and under what conditions it might fail to do so. You might set your alarm clock to wake you just before a transition takes place, so you can confirm that it happened correctly. You might even suggest that the designers integrate such an alarm clock into the coffee maker itself.

The aviation research literature is full of discussions of ‘automation surprises’: situations in which the automation behaves in ways the operator (or rather, supervisor) did not expect or anticipate. From the early days of advanced automation in aviation, through the mid-1990s and up to the present, we have continued to struggle with the supervisory role. In particular, we struggle with the timely transition from being on the loop to being in the loop. Dekker and Woods (2024) describe some automation as being “strong, silent, and wrong”. Their work is an important call for designers who make automation strong to at least eliminate the “silent” part, recognising that we can’t eliminate every possibility of the automation being wrong. It is precisely when the machine is silent that we don’t know it is time for us to intervene.

Tradeoffs are criticality dependent

Depending on criticality, we need our technical systems not to be silent. We want clear indications of states, of impending transitions, and of any situations in which the conditions necessary for such transitions do not obtain. We’d like our systems to ‘communicate their intentions’ so we know what to expect and are not surprised.
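To make these three requirements (state indication, announced transitions, and explained blocked transitions) concrete, here is a minimal Python sketch of the coffee maker from the previous section redesigned to be supervisable. The states, thresholds and messages are hypothetical illustrations, not taken from any real appliance, and timing is not modelled: each call to `step()` stands for the next transition.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical, simplified sequence of states for a timer-driven coffee maker.
STATES = ["IDLE", "GRINDING", "HEATING", "BREWING", "READY"]


@dataclass
class CoffeeMaker:
    water_ml: int
    beans_g: int
    cup_present: bool
    state: str = "IDLE"
    log: List[str] = field(default_factory=list)

    def blocking_condition(self, nxt: str) -> Optional[str]:
        """Return why the transition into `nxt` cannot happen, or None if it can."""
        if nxt == "GRINDING" and self.beans_g < 10:
            return "not enough beans in the hopper"
        if nxt == "HEATING" and self.water_ml < 150:
            return "not enough water in the reservoir"
        if nxt == "BREWING" and not self.cup_present:
            return "no cup under the brewing head"
        return None

    def step(self) -> bool:
        """Attempt the next transition, announcing it instead of acting silently."""
        if self.state == STATES[-1]:
            return False
        nxt = STATES[STATES.index(self.state) + 1]
        self.log.append(f"{self.state}: about to enter {nxt}")  # impending transition
        reason = self.blocking_condition(nxt)
        if reason is not None:
            # The condition for the transition does not obtain: say so and stop.
            self.log.append(f"{self.state}: cannot enter {nxt} ({reason})")
            return False
        self.state = nxt
        self.log.append(f"{self.state}: entered")  # current state indication
        return True


# Usage: the cup was forgotten, and the machine reports it rather than staying silent.
maker = CoffeeMaker(water_ml=300, beans_g=20, cup_present=False)
while maker.step():
    pass
print("\n".join(maker.log))
print(maker.state)  # HEATING
```

The design choice is that every transition is announced before it happens and every blocked transition comes with a reason, so a supervisor reading the log is never left guessing why the machine stopped.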

On the human’s side, in the absence of better technical system design, we must invest in good training and good support materials that enable humans to understand their systems, including the logic and rationale of their design. This should help humans know which machine state is the right state for a given object and context, and be prepared to jump in at short notice if anything looks out of place. To do that, one must know the right place for every piece of the socio-technical system picture. And one must maintain a state of mind I call ‘proper paranoia’: recognising that despite the very high reliability of the machines and automated systems we work with, they may behave unexpectedly at any minute, with little if any warning.

Only people can handle gaps in technical systems

Technical systems tend to be very good at doing what they are told to do, in a predictable way. But many situations cannot be foreseen, and their solutions cannot be programmed or prescribed, so our human ability to adapt is very valuable. With these examples, I am arguing that our systems are far from good enough to be managed without human contribution.

Having the human in the loop is very often the best risk mitigation we have. Of course, recruitment and selection must be done properly, and people need quality training, continuous information and sufficient experience.

But given this, when humans confront a new, unforeseen situation, we are often creative enough to invent a way to solve it. Every day, perhaps every second, humans are filling the gaps in the system. And most of the time, we see that as a normal part of what we do; nothing extraordinary, and nothing requiring a report. But as a result, we lack the statistics to demonstrate that most system flaws are mitigated by the human in the loop. So we must make more time to recognise and understand the human contribution, and better support the humans in the loop so that people stay in control.

As the accident record shows, the space between in the loop and out of the loop is fertile ground for us all to better ourselves and our systems for the sake of safety and quality.

References: https://skybrary.aero/articles/hindsight-36
